This morning I arrived at my office with an idea to develop, and I decided to work on the blackboard that hangs on the wall opposite my desk. I seldom use it, but for some reason writing with coloured markers on that white surface seems more thought-inspiring than my usual scribbling in a notebook.

One clear practical advantage of the (white) blackboard is that whenever my train of thought hits a dead end or I write some nonsense, I just erase it and start over, keeping the good stuff untouched and still in sight; in a notebook this is not possible, as one needs to turn the page. On the negative side, there is less backward traceability: if I erase an idea that later turns out to be a good one, it is lost forever.

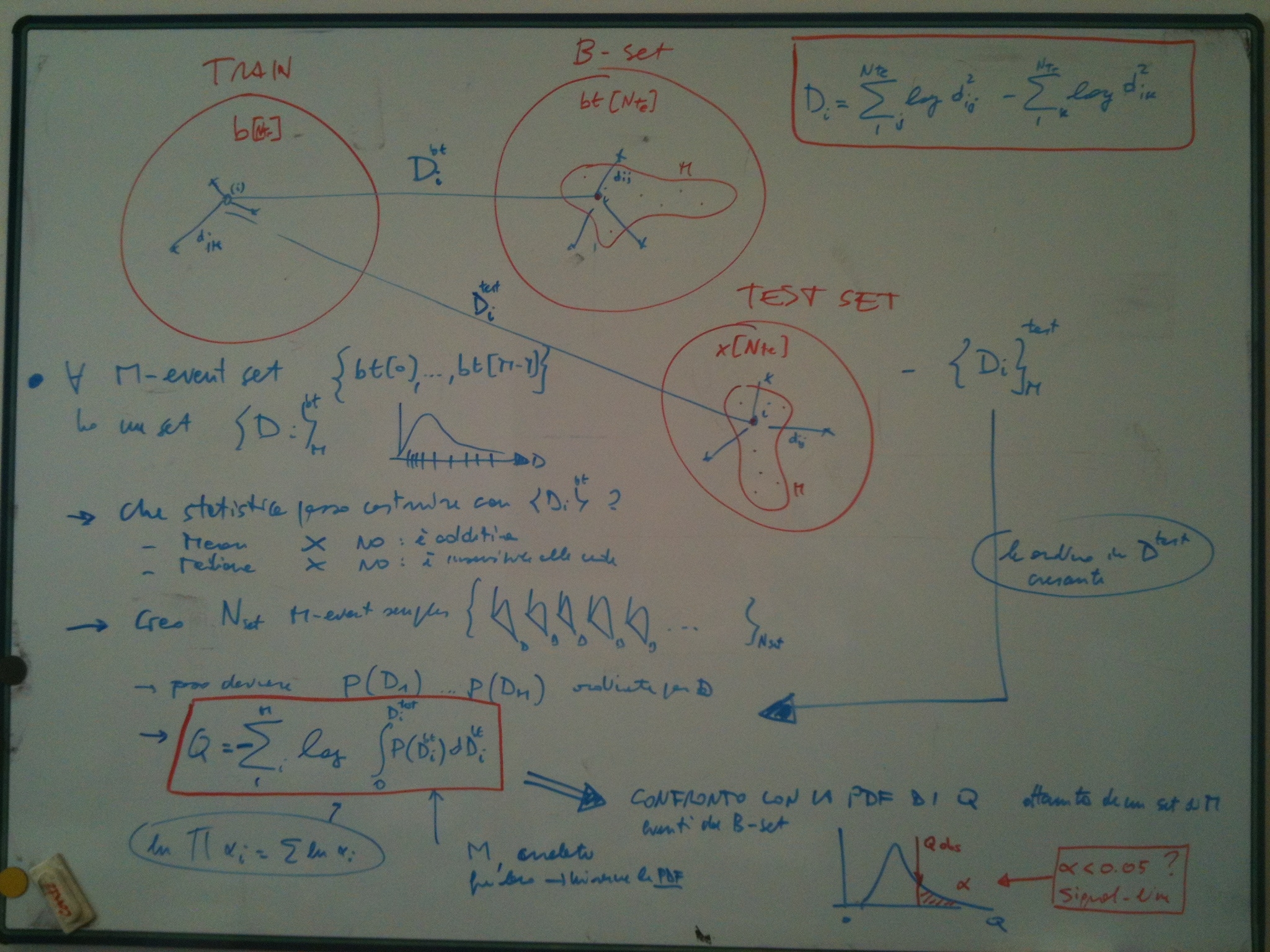

The idea I am trying to develop is that of constructing a goodness-of-fit (GoF) measure in the multi-dimensional space of many observables (ones, say, that describe the kinematics of a Higgs boson candidate event) in a way that does not suffer from the scarce statistics with which one can usually populate that space.

The latter problem goes under the name of the "curse of dimensionality", and there are libraries full of literature on how to deal with it, so I do not expect to be inventing anything today. However, I hope to design a GoF test that can be used with a bootstrap technique I am applying to a rather unconventional classification problem: detecting a totally unknown signal (one for whose distributions in the feature space we have no prior knowledge) amidst a large background that is instead perfectly well known.

The above problem is quite different from that of classifying an event as signal or background when both are known. The latter is an extremely well-studied problem, and there exist scores of excellent methods to solve it: neural networks, boosted decision trees, Fisher discriminants, support vector machines, etcetera, etcetera. The former has received much less attention, yet it is interesting in particle physics, where we search for unknown signals!

Ah, by the way: the bootstrap (introduced by Bradley Efron in the late seventies as a generalization of the so-called "jackknife") is quite a powerful statistical method based on resampling from a set of data in order to estimate the properties, such as bias, variance or confidence intervals, of quantities computed from that set.

It would take me much, much longer than a blog post to explain my idea in detail; instead, it may be more fun to just show here today's scribblings, which are in front of me as I start to code them into a C++ program. If the test succeeds, it will be another small step toward a goal I set myself a while ago: documenting this idea in a paper.

And in case you wonder whether I fear that my idea might be stolen by somebody who then publishes it before I do: well, no. If that happens, it means it was a good idea after all, and that alone would be enough to satisfy me!